Dropping and Testing Features in ML | How to Choose a Feature Selection Method For Machine Learning




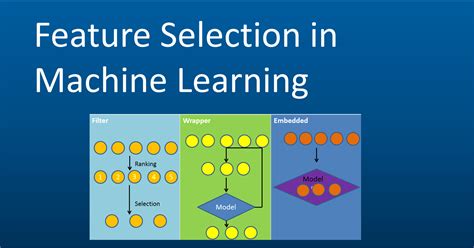

Feature selection is the process of reducing the number of input variables when developing a predictive model. It is desirable to reduce the number of input variables both to reduce the computational cost of modeling and, in some cases, to improve the performance of the model.

Should Feature Selection Be Done Before the Train/Test Split?

Recursive Feature Elimination, or RFE for short, is a popular feature selection algorithm.
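As a minimal sketch of RFE in practice, the example below uses scikit-learn's `RFE` with a logistic regression estimator on synthetic data (the data and the choice of estimator are illustrative assumptions, not from the source). RFE repeatedly fits the estimator, ranks features by coefficient magnitude, and removes the weakest until the requested number remains.

```python
import numpy as np
from sklearn.feature_selection import RFE
from sklearn.linear_model import LogisticRegression

# Synthetic data (assumption for illustration): only features 0 and 1 drive the label
rng = np.random.default_rng(0)
X = rng.normal(size=(300, 6))
y = (X[:, 0] + X[:, 1] > 0).astype(int)

# Refit the model and drop the feature with the smallest |coefficient|, one per round (step=1)
rfe = RFE(estimator=LogisticRegression(), n_features_to_select=2, step=1)
rfe.fit(X, y)

print(rfe.support_)   # boolean mask of the kept features
print(rfe.ranking_)   # 1 = selected; larger numbers were eliminated earlier
```

The `step` parameter controls how many features are removed per round; larger values are faster but coarser.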

Feature selection is the process of identifying and selecting a subset of the input variables that are most relevant to predicting the target.


The counterargument is that if only the training set (chosen from the whole dataset) is used for feature selection, then the selected features or the order of the feature importance scores are likely to change whenever the train/test split changes.

What you're doing is manual feature selection based on the test set. You're right that it's not correct to proceed this way: in theory, feature selection should be done using only the training data.

Common feature selection approaches include:
1. Boruta
2. Variable importance from machine learning algorithms
3. Lasso regression
4. Stepwise forward and backward selection
5. Relative importance from linear regression
6. Recursive feature elimination
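To keep selection confined to the training data, one option (our suggestion, not necessarily the author's method) is to place the selector inside a scikit-learn `Pipeline`, so it is refit only on whatever rows the pipeline is trained on. The synthetic data, `k=3`, and the ANOVA scorer are assumptions for illustration.

```python
import numpy as np
from sklearn.feature_selection import SelectKBest, f_classif
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score, train_test_split
from sklearn.pipeline import Pipeline

# Synthetic data (assumption): 20 features, only the first 3 are informative
rng = np.random.default_rng(1)
X = rng.normal(size=(400, 20))
y = (X[:, 0] - X[:, 1] + X[:, 2] > 0).astype(int)

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25, random_state=0)

# The selector is fit only on the data passed to pipe.fit, so test rows
# never influence which features are kept
pipe = Pipeline([
    ("select", SelectKBest(score_func=f_classif, k=3)),
    ("model", LogisticRegression()),
])
pipe.fit(X_train, y_train)
test_acc = pipe.score(X_test, y_test)

# Same idea under cross-validation: selection is refit inside every training fold
cv_scores = cross_val_score(pipe, X_train, y_train, cv=5)
selected = pipe.named_steps["select"].get_support(indices=True)
print(selected, test_acc, cv_scores.mean())
```

Because selection happens inside each fold, the cross-validation score reflects the whole procedure, including its instability across splits.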

Univariate feature selection (or univariate testing) applies statistical tests to find relationships between the output variable and each input variable in isolation, testing one input variable at a time.
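For a classification target, scikit-learn's `f_classif` runs a one-way ANOVA F-test between each feature and the class label, one feature at a time. A minimal sketch on synthetic data, assuming only feature 3 carries signal:

```python
import numpy as np
from sklearn.feature_selection import f_classif

# Synthetic data (assumption): only feature 3 determines the label
rng = np.random.default_rng(2)
X = rng.normal(size=(300, 5))
y = (X[:, 3] > 0).astype(int)

F, p_values = f_classif(X, y)   # one independent F-test per column
ranking = np.argsort(F)[::-1]   # feature indices, strongest first
print(F.round(1), ranking)
```

Because each test ignores the other features, interactions between features are invisible to this kind of filter.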


A typical workflow might involve trial and error: dropping a feature (or a group of features), training a model, evaluating accuracy, and repeating. In a more directed workflow, we can decide to drop features that have low importance. The recommended strategy is to assign features to High, Medium, and Low impact tiers without focusing too much on the exact magnitudes. If we need to show the relative comparison between features, try …

A learning curve is a plot of model learning performance over experience or time. Learning curves are a widely used diagnostic tool in machine learning for algorithms that learn from a training dataset incrementally.
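The trial-and-error loop (drop a feature, retrain, evaluate, repeat) is often called drop-column importance. Below is a minimal sketch; the helper name `drop_column_importance`, the logistic regression model, and the toy data are our assumptions.

```python
import numpy as np
from sklearn.base import clone
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split


def drop_column_importance(model, X_train, y_train, X_val, y_val):
    """Hypothetical helper: baseline validation accuracy minus accuracy without each column."""
    baseline = clone(model).fit(X_train, y_train).score(X_val, y_val)
    importances = np.empty(X_train.shape[1])
    for j in range(X_train.shape[1]):
        # Retrain from scratch without column j and score on the same validation rows
        reduced = clone(model).fit(np.delete(X_train, j, axis=1), y_train)
        importances[j] = baseline - reduced.score(np.delete(X_val, j, axis=1), y_val)
    return importances


# Toy data (assumption): only feature 0 matters
rng = np.random.default_rng(3)
X = rng.normal(size=(500, 4))
y = (X[:, 0] > 0).astype(int)
X_tr, X_val, y_tr, y_val = train_test_split(X, y, test_size=0.3, random_state=0)

imp = drop_column_importance(LogisticRegression(), X_tr, y_tr, X_val, y_val)
print(imp.round(3))
```

Retraining once per feature is expensive, which is one reason the tiered (High/Medium/Low) view is more practical than exact magnitudes.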

Two things distinguish top data scientists from others in most cases: feature creation and feature selection. That is, creating features that capture deeper or hidden insights about the business or customer, and then making the right choices about which features to use in your model.

Feature selection is a very popular interview question, regardless of the ML domain. This post is part of a blog series on feature selection; have a look at the Wrapper (part 2) and Embedded posts as well.

If you look only at individual correlations, you may accidentally drop features that are uninformative on their own but useful in combination with others. If you have many features, you can use regularization instead of throwing away data. In some cases it will be wise to drop some features, but relying on pairwise correlations is an overly simplistic solution that may be harmful.
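A small illustration of how individual correlations can mislead, using a synthetic XOR target (our own toy example, not from the source): each feature alone is essentially uncorrelated with the label, yet the two together determine it exactly.

```python
import numpy as np
from sklearn.tree import DecisionTreeClassifier

rng = np.random.default_rng(4)
x0 = rng.integers(0, 2, size=10_000)
x1 = rng.integers(0, 2, size=10_000)
y = x0 ^ x1  # XOR: the label depends on both features jointly

# Each feature on its own is (almost) uncorrelated with the label...
r0 = np.corrcoef(x0, y)[0, 1]
r1 = np.corrcoef(x1, y)[0, 1]

# ...yet a model given both features fits the label perfectly (training accuracy)
X = np.column_stack([x0, x1])
acc_both = DecisionTreeClassifier(random_state=0).fit(X, y).score(X, y)
acc_x0_only = DecisionTreeClassifier(random_state=0).fit(X[:, :1], y).score(X[:, :1], y)
print(round(r0, 3), round(r1, 3), acc_both, round(acc_x0_only, 3))
```

A correlation filter would discard both features here, even though they are jointly all the model needs.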

The test set has to be processed by the fitted pipeline in order to extract the same feature vectors. My test set files have the same structure as the training set. A possible problem is an unseen class name in the test set: in that case the StringIndexer will fail to find the label, and an exception will be raised.

Feature selection algorithms include the following:
1. Instance-based approaches: there is no explicit procedure for feature subset generation. Many small data samples are drawn from the data, and features are weighted according to their role in differentiating instances of different classes within each sample. Features with higher weights can be selected.
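The source does not name a specific instance-based algorithm; Relief is one well-known method of this kind. The sketch below is a minimal binary-class Relief under our own assumptions (Manhattan distance on features rescaled to [0, 1], one nearest hit and one nearest miss per sampled instance); the function name `relief` is hypothetical.

```python
import numpy as np


def relief(X, y, n_iter=100, seed=0):
    """Minimal Relief sketch: reward features that separate an instance from its
    nearest neighbour of the other class, penalise ones that differ within its class."""
    rng = np.random.default_rng(seed)
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    # Rescale each feature to [0, 1] so per-feature differences are comparable
    lo = X.min(axis=0)
    span = X.max(axis=0) - lo
    span[span == 0] = 1.0
    Xs = (X - lo) / span
    w = np.zeros(X.shape[1])
    for _ in range(n_iter):
        i = rng.integers(len(Xs))                 # sample one instance
        dist = np.abs(Xs - Xs[i]).sum(axis=1)     # Manhattan distance to all instances
        dist[i] = np.inf                          # never pick the instance itself
        same = y == y[i]
        hit = np.argmin(np.where(same, dist, np.inf))    # nearest same-class instance
        miss = np.argmin(np.where(~same, dist, np.inf))  # nearest other-class instance
        w += (np.abs(Xs[i] - Xs[miss]) - np.abs(Xs[i] - Xs[hit])) / n_iter
    return w


# Toy data (assumption): the class depends only on feature 0
rng = np.random.default_rng(5)
X = rng.uniform(size=(200, 3))
y = (X[:, 0] > 0.5).astype(int)
weights = relief(X, y, n_iter=200)
print(weights.round(3))
```

Features with the highest weights would then be kept; the cut-off (top-k or a weight threshold) is a separate choice.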


In machine learning, feature selection is the process of choosing variables that are useful in predicting the response (Y). It is considered good practice to identify which features are important when building predictive models. In this post, you will see how to implement 10 powerful feature selection approaches in R.

One simple approach is to add a random feature to the dataset. This random feature is understood to have no useful information for predicting Y. After training the ML model, extract the feature importances. Features that have lower importance scores than the random variable are considered weak and useless. Drop the weak features.
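The random-feature recipe can be sketched with scikit-learn as follows. The toy data, the `RandomForestClassifier`, and the helper name `select_above_random_probe` are our assumptions; impurity-based importances are used for brevity, although they are known to be biased toward high-cardinality features, so permutation importance is a common alternative.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier


def select_above_random_probe(X, y, seed=0):
    """Hypothetical helper: keep features whose importance beats a pure-noise column."""
    rng = np.random.default_rng(seed)
    probe = rng.normal(size=(X.shape[0], 1))   # random feature, no information about y
    X_aug = np.hstack([X, probe])
    model = RandomForestClassifier(n_estimators=200, random_state=seed).fit(X_aug, y)
    imp = model.feature_importances_
    threshold = imp[-1]                        # importance of the random probe
    kept = [j for j in range(X.shape[1]) if imp[j] > threshold]
    return kept, imp


# Toy data (assumption): features 0-2 are informative, 3-5 are noise
rng = np.random.default_rng(6)
X = rng.normal(size=(500, 6))
y = (X[:, 0] + X[:, 1] - X[:, 2] > 0).astype(int)
kept, importances = select_above_random_probe(X, y)
print(kept, importances.round(3))
```

Noise features can land slightly above or below a single probe by chance; repeating with several probes or seeds makes the cut more stable.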


You should consider using less complex models (e.g. limiting the depth of the trees in a Random Forest), dropping some features (e.g. starting with only 3 of the 53 features), regularisation, or data augmentation.
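As one example of the regularisation option, an L1 penalty (Lasso) can shrink the coefficients of unhelpful features to exactly zero, which acts as built-in feature selection. A minimal sketch with scikit-learn's `Lasso`; the synthetic data and `alpha=0.1` are illustrative assumptions.

```python
import numpy as np
from sklearn.linear_model import Lasso

# Synthetic regression data (assumption): only features 0 and 1 matter
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 5))
y = 3 * X[:, 0] - 2 * X[:, 1] + 0.1 * rng.normal(size=200)

lasso = Lasso(alpha=0.1).fit(X, y)   # larger alpha zeroes out more coefficients
kept = np.flatnonzero(lasso.coef_)   # indices of features with non-zero weight
print(lasso.coef_.round(3), kept)
```

Features should be on comparable scales (e.g. standardised) before applying an L1 penalty, otherwise the penalty affects them unevenly.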

Types of Feature Selection Methods in ML

The Chi-square test is used for categorical features in a dataset. We calculate the Chi-square statistic between each feature and the target and select the desired number of features with the best Chi-square scores.
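A minimal sketch with scikit-learn's `SelectKBest` and `chi2` on synthetic categorical codes (the data and `k=1` are assumptions). Note that scikit-learn's `chi2` requires non-negative inputs and treats values as counts, so one-hot encoding is usually the safer representation for nominal categories.

```python
import numpy as np
from sklearn.feature_selection import SelectKBest, chi2

# Synthetic data (assumption): non-negative category codes, only feature 0 relates to y
rng = np.random.default_rng(7)
X = rng.integers(0, 3, size=(300, 4))
y = (X[:, 0] == 2).astype(int)

selector = SelectKBest(score_func=chi2, k=1).fit(X, y)
print(selector.scores_.round(1))            # chi-square statistic per feature
selected = selector.get_support(indices=True)
X_reduced = selector.transform(X)           # keeps only the selected column(s)
```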



Feature importance refers to techniques that assign a score to input features based on how useful they are at predicting a target variable. There are many types and sources of feature importance scores, although popular examples include statistical correlation scores, coefficients calculated as part of linear models, decision trees, and permutation importance scores.

Ways to deal with multicollinearity include:
1. Dropping the feature that is less important for your analysis or has a higher VIF (variance inflation factor). By removing features with high multicollinearity, you can reduce redundancy and make your regression model more interpretable, ultimately leading to more reliable coefficient estimates.
2. Combining variables or using dimensionality reduction techniques.
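The variance inflation factor for feature j is VIF_j = 1 / (1 - R_j^2), where R_j^2 is the R-squared from regressing feature j on all the other features (plus an intercept). A minimal NumPy sketch; the helper name `vif` and the toy data are our assumptions.

```python
import numpy as np


def vif(X):
    """Hypothetical helper: VIF for each column of X via ordinary least squares."""
    X = np.asarray(X, dtype=float)
    n, p = X.shape
    out = np.empty(p)
    for j in range(p):
        target = X[:, j]
        # Regress column j on every other column plus an intercept
        others = np.column_stack([np.ones(n), np.delete(X, j, axis=1)])
        coef, *_ = np.linalg.lstsq(others, target, rcond=None)
        resid = target - others @ coef
        r2 = 1.0 - resid.var() / target.var()
        out[j] = 1.0 / (1.0 - r2)
    return out


# Toy data (assumption): x2 is almost exactly x0 + x1, x3 is independent
rng = np.random.default_rng(8)
x0, x1, x3 = rng.normal(size=(3, 500))
x2 = x0 + x1 + 0.05 * rng.normal(size=500)
vifs = vif(np.column_stack([x0, x1, x2, x3]))
print(vifs.round(1))
```

A common rule of thumb flags VIF values above roughly 5 to 10; dropping one feature from the collinear group (here x0, x1, x2) brings the rest back down.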

How to interpret and use feature importance in ML

Why do we drop the target/label before splitting data into train and test sets? For example, in the code below:

```python
from sklearn.model_selection import train_test_split

# df is assumed to be an already-loaded pandas DataFrame
X = df.drop('Scaled sound pressure level', axis=1)  # input features only
y = df['Scaled sound pressure level']               # target

# 80/20 split, fixing the seed to reproduce the results (42 is an arbitrary choice)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)
```

The target is separated from X so that the model's inputs contain only features; leaving it in X would leak the answer into the inputs.

In this video, I start a new playlist on feature selection and discuss how we can drop features using Pearson correlation.
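A common way to drop features by Pearson correlation is to remove one feature from every pair whose absolute correlation exceeds a threshold. A minimal pandas sketch; the helper name `drop_correlated`, the 0.9 threshold, and the toy data are assumptions, and the caveat about pairwise correlations discussed earlier still applies.

```python
import numpy as np
import pandas as pd


def drop_correlated(df, threshold=0.9):
    """Hypothetical helper: drop the later column of each highly correlated pair."""
    corr = df.corr(method="pearson").abs()
    # Keep only the upper triangle so each pair is checked once (diagonal excluded)
    upper = corr.where(np.triu(np.ones(corr.shape, dtype=bool), k=1))
    to_drop = [col for col in upper.columns if (upper[col] > threshold).any()]
    return df.drop(columns=to_drop), to_drop


# Toy data (assumption): b is almost a rescaled copy of a
rng = np.random.default_rng(9)
a = rng.normal(size=200)
df = pd.DataFrame({"a": a, "b": 2 * a + 0.01 * rng.normal(size=200), "c": rng.normal(size=200)})
reduced, dropped = drop_correlated(df)
print(dropped, list(reduced.columns))
```

Which member of a correlated pair survives depends only on column order here; choosing by domain relevance or by importance to the target is often better.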


